Our Graphics and Vision group within the DMTLab at BCU focuses on synthetic image generation, applied artificial intelligence, digital twins, animation and computational geometry. We teach computers to interpret, create and animate visual and multisensory data. This research encompasses the capture, modelling, simulation and display of multisensory virtual representations of environments.

Spanning a vast array of computer graphics techniques and processes, we draw on rigorous mathematical analysis, signal processing and inspiration from human-centred perception and attention. This work is applied to areas such as digital manufacturing, imaging, scientific computing and animation.

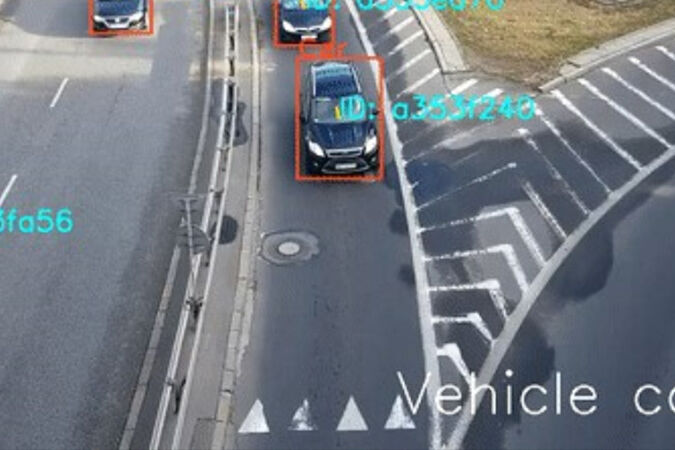

Our researchers are world-leading and create state-of-the-art systems that better detect and label objects, people, scenes, context and behaviours. These advances in hardware, software, algorithms and human factors are then applied in areas such as healthcare, gaming and simulation systems, where they enrich human experience, immersion and presence in digital content. Our distinctive approach to this field is to realise the symbiotic relationship between theory and practice, informed by extensive insight into human perception.

Areas of Activity

- Computer Graphics

- Game Science

- Visual Effects

- Animation

- Motion Capture

- Computational Geometry

- Virtual Environments

- Semantics and Context Awareness

- Procedural Generation

- Natural Phenomena Simulation

- Rendering

- Perception and Attention

- Applied Artificial Intelligence / Machine Learning / Deep Learning

- High Dynamic Range Imaging

- Parallel Computing

- Serious Games

Staff working in this group

- Carlo Harvey (Group lead)

- Alan Dolhasz

- Mathew Randall

- Lianne Forbes

- Cathy Easthope

- Sandeep Chahil

- Jake O’Connor

- Nathan Dewell

- Richard Davies

Research Students

- Mattia Colombo