Smart reading glasses

Team Op-Tech

Five Computer Science students, Hamza, Marley, Mohamed, Mohammed and Rabia, have teamed up to create Op-Tech: a handy pair of smart reading glasses that helps people who have difficulty reading by converting the text in front of them into speech.

Give us a brief overview of your project

We're making a pair of glasses that helps blind and partially sighted people, as well as those who may struggle to read due to dyslexia. When you are wearing the glasses and reading any text, such as a book or a menu, the glasses take a picture and run it through an API that converts the image into text, which is then converted into speech. Essentially, it is a handy text-to-speech device built into a pair of glasses.

What made you want to develop this idea?

We had a lot of fun ideas when we started brainstorming, but we knew we wanted to create something to assist people with disabilities. Initially, we wanted to incorporate sign language into our project, for example by attempting to convert physical sign language into text, but the glasses seemed like a more straightforward solution. We researched existing eyewear on the market that does something similar, but it was all expensive, so we knew we had to create an accessible and affordable option.

Why is there a need for what you’re creating?

We think reading should be accessible to everyone, and this project should make that possible in the comfort of your own home. We felt that if we could find an easier and cheaper way to do this, it could be used widely, even in schools.

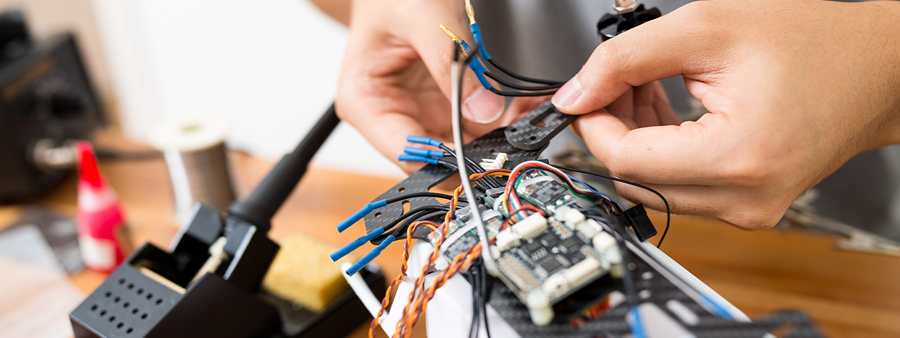

Can you explain the kind of equipment you are using and how you are creating the physical product?

In terms of the physical elements, we will need a frame for the glasses, which we are going to 3D print using the facilities at the University. We will use a Raspberry Pi 3 to run our software, together with a camera module triggered by a simple button and a speaker module. The physical parts were reasonably cheap; it's mainly the software that can be complex. We are using Python to write the code, which sends images to the Google Cloud Vision API (Application Programming Interface). This uses machine learning to analyse the images and identify the text in them. We can then use another API to convert the text we receive into speech.
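The capture, text recognition and speech steps described above can be sketched as a small Python pipeline. This is a minimal sketch under our own assumptions, not the team's actual code: the names `read_aloud`, `clean_text` and `google_vision_ocr` are hypothetical, the OCR and speech stages are passed in as plain functions so they can be swapped, and the Raspberry Pi camera, button and speaker wiring is left out. The `google_vision_ocr` backend assumes the `google-cloud-vision` client library is installed and Google Cloud credentials are configured; the project does not name its text-to-speech API, so any engine could be plugged in as `speak`.

```python
from typing import Callable

# Hypothetical signatures for the two pluggable stages (assumptions, not the team's code).
OcrFn = Callable[[bytes], str]    # image bytes -> recognised text
SpeakFn = Callable[[str], None]   # text -> audio output


def clean_text(raw: str) -> str:
    """Collapse OCR line breaks and repeated spaces into one readable string."""
    return " ".join(raw.split())


def read_aloud(image_bytes: bytes, ocr: OcrFn, speak: SpeakFn) -> str:
    """Handle one button press: image -> text -> speech. Returns the text spoken."""
    text = clean_text(ocr(image_bytes))
    if not text:
        # Tell the wearer something rather than staying silent.
        text = "No text found."
    speak(text)
    return text


def google_vision_ocr(image_bytes: bytes) -> str:
    """One possible OCR backend using the google-cloud-vision client library.

    Assumes `pip install google-cloud-vision` and valid Google Cloud credentials.
    """
    from google.cloud import vision  # imported lazily so the sketch runs without it

    client = vision.ImageAnnotatorClient()
    response = client.text_detection(image=vision.Image(content=image_bytes))
    if response.error.message:
        raise RuntimeError(response.error.message)
    annotations = response.text_annotations
    # The first annotation holds the full block of detected text.
    return annotations[0].description if annotations else ""


if __name__ == "__main__":
    # Stand-ins for the camera/OCR and speaker, so the flow can be tried on a laptop.
    spoken = []
    fake_ocr = lambda _img: "TODAY'S\nSPECIALS\n  Soup  of the day"
    result = read_aloud(b"fake-image-bytes", ocr=fake_ocr, speak=spoken.append)
    print(result)  # TODAY'S SPECIALS Soup of the day
```

Passing the stages in as functions keeps the button-press logic testable with stand-ins before any camera, speaker or cloud account is connected; on the glasses, `ocr` could be `google_vision_ocr` and `speak` whichever text-to-speech engine is chosen.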

What challenges have you had to overcome?

We have struggled to find our way around the APIs, as the quality of the software isn’t great, so it’s been difficult to figure them out. There’s a lot of business jargon that can be difficult to understand, but we managed to learn it and work around it. It’s been a lot of running into issues and having to find creative solutions! We've done a great job of tackling these issues, though.

Where do you envision the future of this project?

Ideally, we would like to branch out into other uses for our glasses. We have had ideas to use the text-to-speech element to translate text in different languages as you read it. We would develop an app so you can choose which language the glasses translate into.

We don’t know much about the Innovation Festival, as this will be our first time at the event. We are excited to see everyone else's showcases of cool technology and the ideas others have come up with.